Question # 1

If your AWS Management Console browser does not show that you are logged in to an

AWS account, close the browser and relaunch the

console by using the AWS Management Console shortcut from the VM desktop.

If the copy-paste functionality is not working in your environment, refer to the instructions

file on the VM desktop and use Ctrl+C, Ctrl+V or Command-C, Command-V.

Configure Amazon EventBridge to meet the following requirements.

1. Use the us-east-2 Region for all resources.

2. Unless specified below, use the default configuration settings.

3. Use your own resource naming unless a resource

name is specified below.

4. Ensure all Amazon EC2 events in the default event

bus are replayable for the past 90 days.

5. Create a rule named RunFunction to send the exact message every 15 minutes to an

existing AWS Lambda function named LogEventFunction.

6. Create a rule named SpotWarning to send a notification to a new standard Amazon SNS

topic named TopicEvents whenever an Amazon EC2

Spot Instance is interrupted. Do NOT create any topic subscriptions. The notification must

match the following structure:

Input Path:

{"instance" : "$.detail.instance-id"}

Input template:

"The EC2 Spot Instance has been interrupted on account."

Explanation:

Here are the steps to configure Amazon EventBridge to meet the above requirements:

Log in to the AWS Management Console by using the AWS Management Console

shortcut from the VM desktop. Make sure that you are logged in to the desired

AWS account.

Go to the EventBridge service in the us-east-2 Region.

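In the EventBridge service, navigate to the "Archives" page.

Click on the "Create archive" button.

Give a name to your archive, select "default" as the source event bus, set the retention period to 90 days, and add an event pattern that matches Amazon EC2 events (source "aws.ec2").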

Navigate to "Rules" page and create a new rule named "RunFunction"

For the rule type, select "Schedule" and set the schedule to run at a fixed rate of

every 15 minutes.

In the "Actions" section, select "Send to Lambda" and choose the existing AWS

Lambda function named "LogEventFunction"

Create another rule named "SpotWarning"

In the "Event pattern" section, select "EC2" as the event source, and filter the

events on "EC2 Spot Instance Interruption Warning"

In the "Actions" section, select "Send to SNS topic" and create a new standard

Amazon SNS topic named "TopicEvents"

In the "Input Transformer" section, set the Input Path to {"instance" : "$.detail.instance-id"} and the Input template to "The EC2 Spot Instance has been interrupted on account."

Now all Amazon EC2 events in the default event bus will be replayable for the past 90

days.
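The steps above can also be sketched as request parameters. The following Python is only an illustration under stated assumptions: the account ID, the archive name Ec2Events, and the target Id are hypothetical, and the `<instance>` placeholder in the template is an assumption about where the captured instance ID would be inserted, since the task text does not show it. The functions only build dictionaries; they do not call AWS.

```python
import json

REGION = "us-east-2"


def archive_params(account_id: str, region: str = REGION) -> dict:
    """Parameters for an archive of EC2 events on the default bus, kept 90 days."""
    return {
        "ArchiveName": "Ec2Events",  # hypothetical name, not given in the task
        "EventSourceArn": f"arn:aws:events:{region}:{account_id}:event-bus/default",
        "EventPattern": json.dumps({"source": ["aws.ec2"]}),
        "RetentionDays": 90,
    }


def run_function_rule() -> dict:
    """Scheduled rule that fires every 15 minutes."""
    return {"Name": "RunFunction", "ScheduleExpression": "rate(15 minutes)"}


def spot_warning_rule() -> dict:
    """Rule that matches EC2 Spot Instance interruption warnings."""
    pattern = {
        "source": ["aws.ec2"],
        "detail-type": ["EC2 Spot Instance Interruption Warning"],
    }
    return {"Name": "SpotWarning", "EventPattern": json.dumps(pattern)}


def spot_warning_target(topic_arn: str) -> dict:
    """SNS target with an input transformer that captures the instance ID."""
    return {
        "Id": "TopicEvents",  # hypothetical target Id
        "Arn": topic_arn,
        "InputTransformer": {
            "InputPathsMap": {"instance": "$.detail.instance-id"},
            # Assumption: <instance> marks where the captured value is inserted.
            "InputTemplate": '"The EC2 Spot Instance <instance> has been interrupted on account."',
        },
    }
```

With boto3 installed and credentials configured, these dictionaries could be passed to the EventBridge client calls `create_archive`, `put_rule`, and `put_targets`; that wiring is left out here so the sketch stays runnable offline.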

Note:

You can use the AWS Management Console, AWS CLI, or SDKs to create and

manage EventBridge resources.

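You can use an EventBridge archive with a 90-day retention period to replay events from the past 90 days. CloudTrail event history lets you view past API activity, but it does not replay events to an event bus.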

You can refer to the AWS EventBridge documentation for more information on how

to configure and use the service: https://aws.amazon.com/eventbridge/

Question # 2

You need to update an existing AWS CloudFormation stack. If needed, a copy of the

CloudFormation template is available in an Amazon S3 bucket named cloudformationbucket.

1. Use the us-east-2 Region for all resources.

2. Unless specified below, use the default configuration settings.

3. Update the Amazon EC2 instance named Devinstance by making the following changes

to the stack named 1700182:

a) Change the EC2 instance type to t2.nano.

b) Allow SSH to connect to the EC2 instance from the IP address range

192.168.100.0/30.

c) Replace the instance profile IAM role with IamRoleB.

4. Deploy the changes by updating the stack using the CFServiceRole role.

5. Edit the stack options to prevent accidental deletion.

6. Using the output from the stack, enter the value of the ProdInstanceId in the text box

below:

Explanation:

Here are the steps to update an existing AWS CloudFormation stack:

Log in to the AWS Management Console and navigate to the CloudFormation

service in the us-east-2 Region.

Find the existing stack named 1700182 and click on it.

Click on the "Update" button.

Choose "Replace current template" and upload the updated CloudFormation

template from the Amazon S3 bucket named "cloudformation-bucket"

In the "Parameters" section, update the EC2 instance type to t2.nano and

add the IP address range 192.168.100.0/30 for SSH access.

Replace the instance profile IAM role with IamRoleB.

In the "Capabilities" section, check the checkbox for "IAM Resources"

Choose the role CFServiceRole and click on "Update Stack"

Wait for the stack to be updated.

Once the update is complete, navigate to the stack, choose "Stack actions", select "Edit termination protection", and activate it to prevent accidental deletion.

To get the value of the ProdInstanceId, navigate to the "Outputs" tab in the

CloudFormation stack and find the key "ProdInstanceId". The value corresponding

to it is the value that you need to enter in the text box.
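As a rough sketch of the same update expressed as API parameters, the Python below builds the arguments one might pass to the CloudFormation `update_stack` and `update_termination_protection` calls and reads an output value from a `describe_stacks`-shaped response. The parameter keys (InstanceType, SSHLocation, InstanceRole) are hypothetical because the template is not shown here, and the role ARN and instance ID in the usage lines are made-up placeholders.

```python
from typing import Optional

STACK_NAME = "1700182"


def update_stack_params(service_role_arn: str) -> dict:
    """Arguments for updating the stack while reusing its current template."""
    return {
        "StackName": STACK_NAME,
        "UsePreviousTemplate": True,
        "Parameters": [
            # Hypothetical parameter keys; the real template may name them differently.
            {"ParameterKey": "InstanceType", "ParameterValue": "t2.nano"},
            {"ParameterKey": "SSHLocation", "ParameterValue": "192.168.100.0/30"},
            {"ParameterKey": "InstanceRole", "ParameterValue": "IamRoleB"},
        ],
        "Capabilities": ["CAPABILITY_IAM"],
        "RoleARN": service_role_arn,
    }


def termination_protection_params() -> dict:
    """Arguments that turn on termination protection for the stack."""
    return {"StackName": STACK_NAME, "EnableTerminationProtection": True}


def output_value(describe_stacks_response: dict, key: str) -> Optional[str]:
    """Return the value of a stack output, or None if the key is absent."""
    for stack in describe_stacks_response.get("Stacks", []):
        for output in stack.get("Outputs", []):
            if output.get("OutputKey") == key:
                return output.get("OutputValue")
    return None


# Usage with placeholder data (the ARN and instance ID are invented for illustration).
params = update_stack_params("arn:aws:iam::111122223333:role/ExampleServiceRole")
sample = {"Stacks": [{"Outputs": [{"OutputKey": "ProdInstanceId",
                                   "OutputValue": "i-0123456789abcdef0"}]}]}
print(output_value(sample, "ProdInstanceId"))
```

Reusing the previous template and changing only parameter values works when the template already exposes these settings as parameters; otherwise the edited template would have to be supplied instead.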

Note:

You can use AWS CloudFormation to update an existing stack.

You can use the AWS CloudFormation service role to deploy updates.

You can refer to the AWS CloudFormation documentation for more information on

how to update and manage stacks: https://aws.amazon.com/cloudformation/

Question # 3

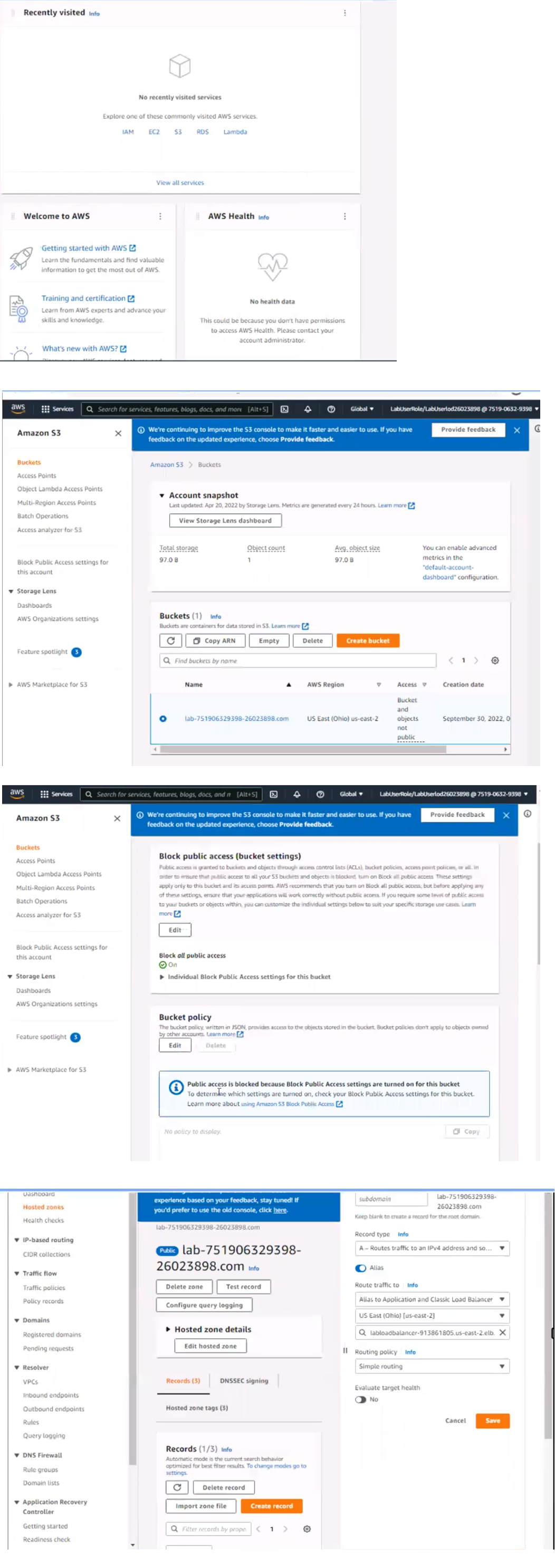

A webpage is stored in an Amazon S3 bucket behind an Application Load Balancer (ALB).

Configure the S3 bucket to serve a static error page in the event of a failure at the primary

site.

1. Use the us-east-2 Region for all resources.

2. Unless specified below, use the default configuration settings.

3. There is an existing hosted zone named lab-751906329398-26023898.com that contains an A record with a simple routing policy that routes traffic to an existing ALB.

4. Configure the existing S3 bucket named lab-751906329398-26023898.com as a static

hosted website using the object named index.html as the index document

5. For the index.html object, configure the S3 ACL to allow for public read access. Ensure

public access to the S3 bucket is allowed.

6. In Amazon Route 53, change the A record for domain lab-751906329398-26023898.com

to a primary record for a failover routing policy. Configure the record so that it evaluates the

health of the ALB to determine failover.

7. Create a new secondary failover alias record for the domain lab-751906329398-26023898.com that routes traffic to the existing S3 bucket.

Explanation:

Here are the steps to configure an Amazon S3 bucket to serve a static error page in the

event of a failure at the primary site:

Log in to the AWS Management Console and navigate to the S3 service in the us-east-2 Region.

Find the existing S3 bucket named lab-751906329398-26023898.com and click on

it.

In the "Properties" tab, click on "Static website hosting" and select "Use this bucket

to host a website".

In "Index Document" field, enter the name of the object that you want to use as the

index document, in this case, "index.html"

In the "Permissions" tab, click on "Block Public Access", and make sure that "Block

all public access" is turned OFF.

Click on "Bucket Policy" and add the following policy to allow public read access:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "PublicReadGetObject",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::lab-751906329398-26023898.com/*"
    }
  ]
}

Now navigate to the Amazon Route 53 service, and find the existing hosted zone

named lab-751906329398-26023898.com.

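Click on the "A record" and update the routing policy to "Failover" with the failover record type "Primary". Keep the existing ALB as the alias target and turn on "Evaluate target health" so that the health of the ALB determines failover.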

Click on "Create Record" button and create a new secondary failover alias record

for the domain lab-751906329398-26023898.com that routes traffic to the existing

S3 bucket.

Now, when the primary site (ALB) goes down, traffic will be automatically routed to

the S3 bucket serving the static error page.
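The failover pair can be sketched as a Route 53 change batch. This Python is a minimal illustration, not a definitive implementation: the SetIdentifier values are hypothetical, and the alias DNS names and alias hosted zone IDs are passed in as arguments because the real values depend on the ALB and on the S3 website endpoint for the Region. It uses CREATE actions, so it assumes the existing simple A record has already been removed or is deleted in the same batch (not shown).

```python
DOMAIN = "lab-751906329398-26023898.com"


def website_configuration() -> dict:
    """S3 static website settings with index.html as the index document."""
    return {"IndexDocument": {"Suffix": "index.html"}}


def failover_record(role: str, set_id: str, alias_dns: str,
                    alias_zone_id: str, evaluate_health: bool) -> dict:
    """One alias A record that takes part in a failover routing policy."""
    return {
        "Action": "CREATE",
        "ResourceRecordSet": {
            "Name": DOMAIN,
            "Type": "A",
            "SetIdentifier": set_id,  # hypothetical identifier
            "Failover": role,         # "PRIMARY" or "SECONDARY"
            "AliasTarget": {
                "HostedZoneId": alias_zone_id,
                "DNSName": alias_dns,
                "EvaluateTargetHealth": evaluate_health,
            },
        },
    }


def change_batch(alb_dns: str, alb_zone_id: str,
                 s3_dns: str, s3_zone_id: str) -> dict:
    """Primary record on the ALB (health evaluated), secondary on the S3 site."""
    return {
        "Changes": [
            failover_record("PRIMARY", "primary-alb", alb_dns, alb_zone_id, True),
            failover_record("SECONDARY", "secondary-s3", s3_dns, s3_zone_id, False),
        ]
    }
```

With boto3, a batch like this could be passed as `ChangeBatch` to the Route 53 client's `change_resource_record_sets`, and the website settings as `WebsiteConfiguration` to the S3 client's `put_bucket_website`.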

Note:

You can use CloudWatch to monitor the health of your ALB.

You can use Amazon S3 to host a static website.

You can use Amazon Route 53 for routing traffic to different resources based on

health checks.

You can refer to the AWS documentation for more information on how to configure

and use these services.

Question # 4

A SysOps administrator manages policies for many AWS member accounts in an AWS Organizations structure. Administrators on other teams have access to the account root user credentials of the member accounts. The SysOps administrator must prevent all teams, including their administrators, from using Amazon DynamoDB. The solution must not affect the ability of the teams to access other AWS services.

Which solution will meet these requirements?

A. In all member accounts, configure IAM policies that deny access to all DynamoDB resources for all users, including the root user.

B. Create a service control policy (SCP) in the management account to deny all DynamoDB actions. Apply the SCP to the root of the organization.

C. In all member accounts, configure IAM policies that deny AmazonDynamoDBFullAccess to all users, including the root user.

D. Remove the default service control policy (SCP) in the management account. Create a replacement SCP that includes a single statement that denies all DynamoDB actions.

B. Create a service control policy (SCP) in the management account to deny all

DynamoDB actions. Apply the SCP to the root of the organization

Explanation:

To prevent all teams within an AWS Organizations structure from using Amazon DynamoDB while allowing access to other AWS services, the most effective solution is to use a service control policy (SCP). SCPs apply at the organization root, organizational unit (OU), or account level and set the maximum permissions available in member accounts. An explicit deny in an SCP cannot be overridden by IAM policies, and it also applies to the root user of each member account, which the IAM policies in options A and C cannot restrict.

B: Create a service control policy (SCP) in the management account to deny all DynamoDB actions. Apply the SCP to the root of the organization. This policy blocks DynamoDB actions across all member accounts without affecting the ability to access other AWS services. Option D does not work because an SCP that contains only a deny statement, with the default FullAWSAccess policy removed, leaves no service allowed. Further information on SCPs and their usage can be found in the AWS documentation on service control policies.
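For illustration, an SCP of the kind option B describes might look like the document built below. This is a sketch: the Sid is a hypothetical label, and the policy is assumed to be attached alongside the default FullAWSAccess policy so that other services stay allowed.

```python
import json


def deny_dynamodb_scp() -> dict:
    """Policy document that explicitly denies every DynamoDB action."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyDynamoDB",  # hypothetical label
                "Effect": "Deny",
                "Action": "dynamodb:*",
                "Resource": "*",
            }
        ],
    }


# Serialized form, as it would be pasted into the policy editor.
policy_json = json.dumps(deny_dynamodb_scp(), indent=2)
print(policy_json)
```

With boto3, the serialized policy could be supplied as `Content` to the Organizations client's `create_policy` with `Type="SERVICE_CONTROL_POLICY"` and then attached to the organization root with `attach_policy`.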

Question # 5

A team of developers is using several Amazon S3 buckets as centralized repositories. Users across the world upload large sets of files to these repositories. The development team's applications later process these files.

A SysOps administrator sets up a new S3 bucket, DOC-EXAMPLE-BUCKET, to support a new workload. The new S3 bucket also receives regular uploads of large sets of files from users worldwide. When the new S3 bucket is put into production, the upload performance from certain geographic areas is lower than the upload performance that the existing S3 buckets provide.

What should the SysOps administrator do to remediate this issue?

A. Provision an Amazon ElastiCache for Redis cluster for the new S3 bucket. Provide the developers with the configuration endpoint of the cluster for use in their API calls.

B. Add the new S3 bucket to a new Amazon CloudFront distribution. Provide the developers with the domain name of the new distribution for use in their API calls.

C. Enable S3 Transfer Acceleration for the new S3 bucket. Verify that the developers are using the DOC-EXAMPLE-BUCKET.s3-accelerate.amazonaws.com endpoint name in their API calls.

D. Use S3 multipart upload for the new S3 bucket. Verify that the developers are using Region-specific S3 endpoint names such as DOC-EXAMPLE-BUCKET.s3.[Region].amazonaws.com in their API calls.

C. Enable S3 Transfer Acceleration for the new S3 bucket. Verify that the developers are

using the DOC-EXAMPLE-BUCKET.s3-accelerate.amazonaws.com endpoint name in their

API calls.

Explanation:

For improving upload performance globally for an Amazon S3 bucket, enabling S3 Transfer

Acceleration is the best solution. This service optimizes file transfers to S3 using Amazon

CloudFront's globally distributed edge locations. After enabling this feature, uploads to the

S3 bucket are first routed to an AWS edge location and then transferred to S3 over an

optimized network path. Option C is correct, and the developers should use the provided

accelerate endpoint in their API calls. For more details, consult the AWS documentation on

S3 Transfer Acceleration.
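A small sketch of how the accelerate endpoint name is derived from the bucket name follows. The helper name is hypothetical; the check for periods reflects the Transfer Acceleration requirement that the bucket name be DNS-compliant and contain no periods.

```python
def accelerate_endpoint(bucket: str) -> str:
    """Return the S3 Transfer Acceleration endpoint host name for a bucket."""
    if "." in bucket:
        # Transfer Acceleration does not support bucket names with periods.
        raise ValueError(f"bucket name {bucket!r} contains a period")
    return f"{bucket}.s3-accelerate.amazonaws.com"


print(accelerate_endpoint("DOC-EXAMPLE-BUCKET"))
# prints DOC-EXAMPLE-BUCKET.s3-accelerate.amazonaws.com
```

Developers who use an SDK usually do not build this host name by hand; in boto3, for example, a client created with `Config(s3={"use_accelerate_endpoint": True})` targets the accelerate endpoint once acceleration is enabled on the bucket.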

Question # 6

A SysOps administrator recently configured Amazon S3 Cross-Region Replication on an S3 bucket.

Which of the following does this feature replicate to the destination S3 bucket by default?

A. Objects in the source S3 bucket for which the bucket owner does not have permissions

B. Objects that are stored in S3 Glacier

C. Objects that existed before replication was configured

D. Object metadata

D. Object metadata

Explanation:

Amazon S3 Cross-Region Replication (CRR) is a feature that automatically replicates objects across AWS Regions. When CRR is configured, certain aspects are replicated by default, and some are not. Here are the details:

- Objects in the source S3 bucket for which the bucket owner does not have permissions: CRR does not replicate objects for which the bucket owner does not have permissions.

- Objects that are stored in S3 Glacier: Objects in the S3 Glacier storage class are not replicated by CRR.

- Objects that existed before replication was configured: Only objects created or modified after the replication configuration will be replicated. Objects that existed before the configuration are not replicated by default.

- Object metadata: CRR replicates the object metadata along with the object to ensure that the replica in the destination bucket is as accurate as possible.

Question # 7

A company needs to automatically monitor an AWS account for potential unauthorized AWS Management Console logins from multiple geographic locations.

Which solution will meet this requirement?

A. Configure Amazon Cognito to detect any compromised IAM credentials.

B. Set up Amazon Inspector. Scan and monitor resources for unauthorized logins.

C. Set up AWS Config. Add the iam-policy-blacklisted-check managed rule to the account.

D. Configure Amazon GuardDuty to monitor the UnauthorizedAccess:IAMUser/ConsoleLoginSuccess finding.

D. Configure Amazon GuardDuty to monitor the

UnauthorizedAccess:IAMUser/ConsoleLoginSuccess finding.

Explanation: Amazon GuardDuty is a threat detection service that continuously monitors

for malicious activity and unauthorized behavior to protect AWS accounts and workloads. It

provides detailed monitoring for unauthorized access attempts, including console login

attempts from unusual locations.
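One way to act on this finding is to route GuardDuty findings through an EventBridge rule. The sketch below builds such an event pattern and includes a tiny local check that mirrors it. It assumes the finding type begins with the name given in the answer (prefix matching is used so that a suffixed variant of the type would also match), and the sample event is made up for illustration.

```python
FINDING_PREFIX = "UnauthorizedAccess:IAMUser/ConsoleLoginSuccess"


def guardduty_rule_pattern() -> dict:
    """EventBridge event pattern for GuardDuty findings of this type."""
    return {
        "source": ["aws.guardduty"],
        "detail-type": ["GuardDuty Finding"],
        "detail": {"type": [{"prefix": FINDING_PREFIX}]},
    }


def is_console_login_finding(event: dict) -> bool:
    """Local stand-in for the pattern above, useful for quick checks."""
    return (
        event.get("source") == "aws.guardduty"
        and event.get("detail-type") == "GuardDuty Finding"
        and event.get("detail", {}).get("type", "").startswith(FINDING_PREFIX)
    )


# Made-up sample event, trimmed to the fields the pattern inspects.
sample_event = {
    "source": "aws.guardduty",
    "detail-type": "GuardDuty Finding",
    "detail": {"type": FINDING_PREFIX + ".B"},
}
print(is_console_login_finding(sample_event))
```

The pattern could be serialized with `json.dumps` and used as the `EventPattern` of a rule whose target is, for example, an SNS topic that notifies the security team.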

Question # 8

A company is creating a new multi-account architecture. A SysOps administrator must implement a login solution to centrally manage user access and permissions across all AWS accounts. The solution must be integrated with AWS Organizations and must be connected to a third-party Security Assertion Markup Language (SAML) 2.0 identity provider (IdP).

What should the SysOps administrator do to meet these requirements?

A. Configure an Amazon Cognito user pool. Integrate the user pool with the third-party IdP.

B. Enable and configure AWS Single Sign-On with the third-party IdP.

C. Federate the third-party IdP with AWS Identity and Access Management (IAM) for each AWS account in the organization.

D. Integrate the third-party IdP directly with AWS Organizations.

B. Enable and configure AWS Single Sign-On with the third-party IdP.

AWS Single Sign-On (AWS SSO), now named AWS IAM Identity Center, provides a centralized interface to manage SSO access

to multiple AWS accounts and business applications. AWS SSO integrates with AWS

Organizations and supports SAML 2.0 identity providers, making it an ideal solution for

centrally managing user access and permissions across multiple AWS accounts.


AWS Certified SysOps Administrator - Associate (SOA-C02) Test Dumps

Exam Code: SOA-C02

Exam Name: AWS Certified SysOps Administrator - Associate (SOA-C02)


Official AWS Certified SysOps Administrator Associate exam info is available on Amazon website at https://aws.amazon.com/certification/certified-sysops-admin-associate/
