- Email support@dumps4free.com

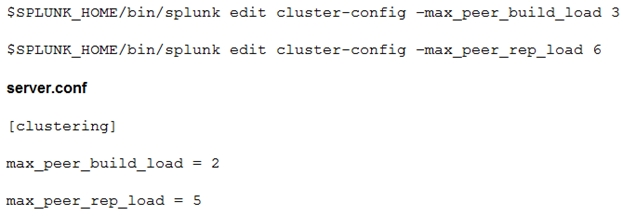

What should be considered when running the following CLI commands with a goal of accelerating an index cluster migration to new hardware?

A. Data ingestion rate

B. Network latency and storage IOPS

C. Distance and location

D. SSL data encryption

Explanation: Network latency is the time it takes for data to travel from one point to

another, and storage IOPS is the number of input/output operations per second. These

factors can affect the performance of the migration and should be taken into account when

planning and executing the migration.

The CLI commands that you have shown in the image are used to adjust the maximum

load that a peer node can handle during bucket replication and rebuilding. These settings

can affect the performance and efficiency of an index cluster migration to new hardware.

Therefore, some of the factors that you should consider when running these commands

are:

Data ingestion rate: The higher the data ingestion rate, the more buckets will be

created and need to be replicated or rebuilt across the cluster. This will increase

the load on the peer nodes and the network bandwidth. You might want to lower

the max_peer_build_load and max_peer_rep_load settings to avoid overloading

the cluster during migration.

Network latency and storage IOPS: The network latency and storage IOPS are

measures of how fast the data can be transferred and stored between the peer

nodes. The lower these values are, the longer it will take for the cluster to replicate

or rebuild buckets. You might want to increase the max_peer_build_load and

max_peer_rep_load settings to speed up the migration process, but not too high

that it causes errors or timeouts.

Distance and location: The distance and location of the peer nodes can also affect

the network latency and bandwidth. If the peer nodes are located in different

geographic regions or data centers, the data transfer might be slower and more

expensive than if they are in the same location. You might want to consider using

site replication factor and site search factor settings to optimize the cluster for

multisite deployment.

SSL data encryption: If you enable SSL data encryption for your cluster, it will add

an extra layer of security for your data, but it will also consume more CPU

resources and network bandwidth. This might slow down the data transfer and

increase the load on the peer nodes. You might want to balance the trade-off

between security and performance when using SSL data encryption.

A customer with a large distributed environment has blacklisted a large lookup from the search bundle to decrease the bundle size using distsearch.conf. After this change, when running searches utilizing the lookup that was blacklisted they see error messages in the Splunk Search UI stating the lookup file does not exist. What can the customer do to resolve the issue?

A. The search needs to be modified to ensure the lookup command specifies parameter local=true.

B. The blacklisted lookup definition stanza needs to be modified to specify setting allow_caching=true.

C. The search needs to be modified to ensure the lookup command specified parameter blacklist=false.

D. The lookup cannot be blacklisted; the change must be reverted.

Explanation: The customer can resolve the issue by modifying the blacklisted lookup definition stanza to specify setting allow_caching=true. This option allows the lookup to be cached on the search head and used for searches, without being included in the search bundle that is distributed to the indexers. This way, the customer can reduce the bundle size and avoid the error messages. Therefore, the correct answer is B, the blacklisted lookup definition stanza needs to be modified to specify setting allow_caching=true.

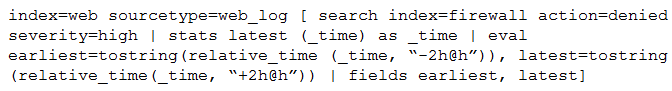

Consider the search shown below.

What is this search’s intended function?

A. To return all the web_log events from the web index that occur two hours before and after the most recent high severity, denied event found in the firewall index.

B. To find all the denied, high severity events in the firewall index, and use those events to further search for lateral movement within the web index.

C. To return all the web_log events from the web index that occur two hours before and after all high severity, denied events found in the firewall index.

D. To search the firewall index for web logs that have been denied and are of high severity.

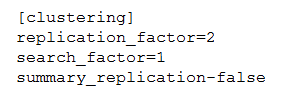

The customer has an indexer cluster supporting a wide variety of search needs, including

scheduled search, data model acceleration, and summary indexing. Here is an excerpt

from the cluster mater’s server.conf:

Which strategy represents the minimum and least disruptive change necessary to protect

the searchability of the indexer cluster in case of indexer failure?

A. Enable maintenance mode on the CM to prevent excessive fix-up and bring the failed indexer back online.

B. Leave replication_factor=2, increase search_factor=2 and enable summary_replication.

C. Convert the cluster to multi-site and modify the server.conf to be site_replication_factor=2, site_search_factor=2.

D. Increase replication_factor=3, search_factor=2 to protect the data, and allow there to always be a searchable copy.

Explanation: This is the minimum and least disruptive change necessary to protect the

searchability of the indexer cluster in case of indexer failure, because it ensures that there

are always at least two copies of each bucket in the cluster, one of which is searchable.

This way, if one indexer fails, the cluster can still serve search requests from the remaining

copy. Increasing the replication factor and search factor also improves the cluster’s

resiliency and availability. The other options are incorrect because they either do not protect the searchability of the

cluster, or they require more changes and disruptions to the cluster.

Option A is incorrect

because enabling maintenance mode on the CM does not prevent excessive fix-up, but

rather delays it until maintenance mode is disabled. Maintenance mode also prevents

searches from running on the cluster, which defeats the purpose of protecting searchability.

Option B is incorrect because leaving replication_factor=2 means that there is only one

searchable copy of each bucket in the cluster, which is not enough to protect searchability

in case of indexer failure. Enabling summary_replication does not help with this issue, as it

only applies to summary indexes, not all indexes.

Option C is incorrect because converting the cluster to multi-site requires a lot of changes and disruptions to the cluster, such as

reassigning site attributes to all nodes, reconfiguring network settings, and rebalancing

buckets across sites. It also does not guarantee that there will always be a searchable copy

of each bucket in each site, unless the site replication factor and site search factor are set

accordingly.

When setting up a multisite search head and indexer cluster, which nodes are required to declare site membership?

A. Search head cluster members, deployer, indexers, cluster master

B. Search head cluster members, deployment server, deployer, indexers, cluster master

C. All splunk nodes, including forwarders, must declare site membership

D. Search head cluster members, indexers, cluster master

Explanation: The internal Splunk authentication will take precedence over any external schemes, such as LDAP. This means that if a username exists in both $SPLUNK_HOME/etc/passwd and LDAP, the Splunk platform will attempt to log in the user using the native authentication first. If the authentication fails, and the failure is not due to a nonexistent local account, then the platform will not attempt to use LDAP to log in. If the failure is due to a nonexistent local account, then the Splunk platform will attempt a login using the LDAP authentication scheme.

| Page 4 out of 17 Pages |

| Previous |