
Topic 2: Misc. Questions

You need to configure an Apache Kafka instance to ingest data from an Azure Cosmos DB

Core (SQL) API account. The data from a container named telemetry must be added to a

Kafka topic named iot. The solution must store the data in a compact binary format.

Which three configuration items should you include in the solution? Each correct answer

presents part of the solution.

NOTE: Each correct selection is worth one point.

A.

"connector.class":

"com.azure.cosmos.kafka.connect.source.CosmosDBSourceConnector"

B.

"key.converter": "org.apache.kafka.connect.json.JsonConverter"

C.

"key.converter": "io.confluent.connect.avro.AvroConverter"

D.

"connect.cosmos.containers.topicmap": "iot#telemetry"

E.

"connect.cosmos.containers.topicmap": "iot"

F.

"connector.class": "com.azure.cosmos.kafka.connect.source.CosmosDBSinkConnector"

Answer: A, C, D

Explanation:

A: The data must flow from Azure Cosmos DB into Kafka, which is the job of the source connector. Kafka Connect for Azure Cosmos DB is a connector to read from and write data to Azure Cosmos DB: the source connector publishes changes from a container to a Kafka topic, while the sink connector exports data from Kafka topics to an Azure Cosmos DB database. Option F does not fit because it names a sink connector and places the class in the source package.

C: Avro is a compact binary format, while JSON is text.

D: The connect.cosmos.containers.topicmap setting pairs a Kafka topic with a container in the form topic#container, so the topic iot and the container telemetry are written as "iot#telemetry".

Extract from a sink connector configuration, which uses the same property names:

"connector.class": "com.azure.cosmos.kafka.connect.sink.CosmosDBSinkConnector",

"key.converter": "io.confluent.connect.avro.AvroConverter",

"connect.cosmos.containers.topicmap": "hotels#kafka"

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/kafka-connector-sink

https://www.confluent.io/blog/kafka-connect-deep-dive-converters-serialization-explained/
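The topic map value can be checked with a small helper. The sketch below is illustrative only: `parse_topic_map` is a hypothetical name, not part of the connector, and it assumes the value is a comma-separated list of `topic#container` pairs, which is how the connector documentation describes it.

```python
def parse_topic_map(value: str) -> list[tuple[str, str]]:
    """Split a topic map string into (topic, container) pairs.

    Assumes the format "topic#container,topic2#container2".
    """
    pairs = []
    for entry in value.split(","):
        topic, sep, container = entry.strip().partition("#")
        # Reject entries that lack the separator or either side of it.
        if not sep or not topic or not container:
            raise ValueError(f"expected topic#container, got {entry!r}")
        pairs.append((topic, container))
    return pairs


print(parse_topic_map("iot#telemetry"))
```

Under that assumption, "iot#telemetry" yields one topic and container pair, while a bare "iot" is rejected because it names no container.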

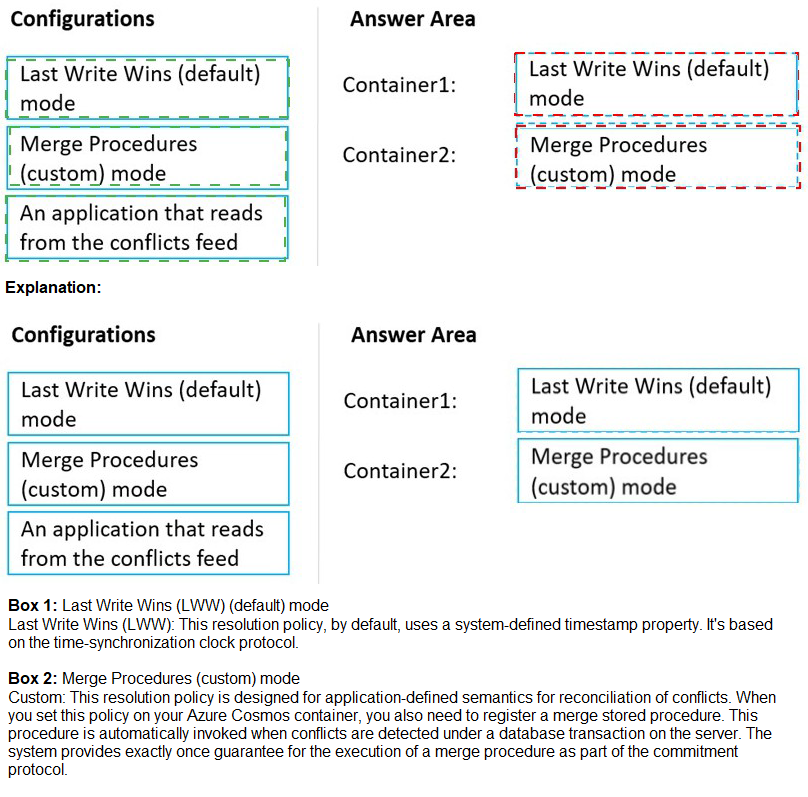

You have an Azure Cosmos DB Core (SQL) API account that is configured for multi-region

writes. The account contains a database that has two containers named container1 and

container2.

The following is a sample of a document in container1:

{

"customerId": 1234,

"firstName": "John",

"lastName": "Smith",

"policyYear": 2021

}

The following is a sample of a document in container2:

{

"gpsId": 1234,

"latitude": 38.8951,

"longitude": -77.0364

}

You need to configure conflict resolution to meet the following requirements:

For container1 you must resolve conflicts by using the highest value for policyYear.

For container2 you must resolve conflicts by accepting the distance closest to latitude:

40.730610 and longitude: -73.935242.

Administrative effort must be minimized to implement the solution.

What should you configure for each container? To answer, drag the appropriate

configurations to the correct containers. Each configuration may be used once, more than

once, or not at all. You may need to drag the split bar between panes or scroll to view

content.

NOTE: Each correct selection is worth one point.
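The two requirements differ in kind. The first compares a single numeric property, which is the kind of rule a last-writer-wins policy with a custom conflict resolution path expresses. The second needs arbitrary logic, which is what a custom policy backed by a stored procedure is for. The sketch below only illustrates the two rules in Python. It is not Azure Cosmos DB code (stored procedures there are written in JavaScript), and the function names are hypothetical.

```python
import math

# Target coordinates from the requirement: (latitude, longitude).
TARGET = (40.730610, -73.935242)


def resolve_container1(a: dict, b: dict) -> dict:
    """Keep the document with the higher policyYear."""
    return a if a["policyYear"] >= b["policyYear"] else b


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres between two points."""
    radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(h))


def resolve_container2(a: dict, b: dict) -> dict:
    """Keep the document whose position is closest to TARGET."""
    da = haversine_km(a["latitude"], a["longitude"], *TARGET)
    db = haversine_km(b["latitude"], b["longitude"], *TARGET)
    return a if da <= db else b
```

The first function needs nothing beyond a comparison on one path, which is why it maps onto a built-in policy with little administrative effort. The second depends on a distance calculation that no built-in policy provides.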

You have an application named App1 that reads the data in an Azure Cosmos DB Core

(SQL) API account. App1 runs the same read queries every minute. The default

consistency level for the account is set to eventual.

You discover that every query consumes request units (RUs) instead of using the cache.

You verify the IntegratedCacheItemHitRate metric and the

IntegratedCacheQueryHitRate metric. Both metrics have values of 0.

You verify that the dedicated gateway cluster is provisioned and used in the connection string.

You need to ensure that App1 uses the Azure Cosmos DB integrated cache.

What should you configure?

A.

the indexing policy of the Azure Cosmos DB container

B.

the consistency level of the requests from App1

C.

the connectivity mode of the App1 CosmosClient

D.

the default consistency level of the Azure Cosmos DB account

Answer: C. the connectivity mode of the App1 CosmosClient

Explanation: Because the integrated cache is specific to your Azure Cosmos DB account

and requires significant CPU and memory, it requires a dedicated gateway node. Connect

to Azure Cosmos DB using gateway mode.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/integrated-cache-faq

You have the following query.

SELECT * FROM c

WHERE c.sensor = "TEMP1"

AND c.value < 22

AND c.timestamp >= 1619146031231

You need to recommend a composite index strategy that will minimize the request units

(RUs) consumed by the query.

What should you recommend?

A.

a composite index for (sensor ASC, value ASC) and a composite index for (sensor ASC,

timestamp ASC)

B.

a composite index for (sensor ASC, value ASC, timestamp ASC) and a composite index

for (sensor DESC, value DESC, timestamp DESC)

C.

a composite index for (value ASC, sensor ASC) and a composite index for (timestamp

ASC, sensor ASC)

D.

a composite index for (sensor ASC, value ASC, timestamp ASC)

Answer: A. a composite index for (sensor ASC, value ASC) and a composite index for (sensor ASC, timestamp ASC)

Explanation: If a query has a filter with two or more properties, adding a composite index will improve performance.

Consider the following query:

SELECT * FROM c WHERE c.name = "Tim" AND c.age > 18

In the absence of a composite index on (name ASC, age ASC), we will utilize a range index for this query. We can improve the efficiency of this query by creating a composite index for name and age.

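Queries with multiple equality filters and a maximum of one range filter (such as >, <, <=, >=, !=) will utilize the composite index. The query in this question has one equality filter (sensor) and two range filters (value and timestamp), so it benefits from two composite indexes, each pairing sensor with one of the range properties.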

Reference: https://azure.microsoft.com/en-us/blog/three-ways-to-leverage-composite-indexes-in-azure-cosmos-db/
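As an illustration, the two composite indexes from option A could be written into a container indexing policy roughly as follows. This is a sketch: the `compositeIndexes` layout follows the Azure Cosmos DB indexing policy format, while the remaining fields and the `composite` helper are assumptions added to make the example complete.

```python
import json


def composite(*paths: str) -> list[dict]:
    """Build one composite index with every path in ascending order."""
    return [{"path": p, "order": "ascending"} for p in paths]


indexing_policy = {
    # Assumed defaults; only compositeIndexes is taken from the answer.
    "indexingMode": "consistent",
    "includedPaths": [{"path": "/*"}],
    "excludedPaths": [],
    "compositeIndexes": [
        composite("/sensor", "/value"),      # equality on sensor, range on value
        composite("/sensor", "/timestamp"),  # equality on sensor, range on timestamp
    ],
}

print(json.dumps(indexing_policy, indent=2))
```

Each composite index lists the equality property first and a single range property last, matching the rule that a composite index serves at most one range filter.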

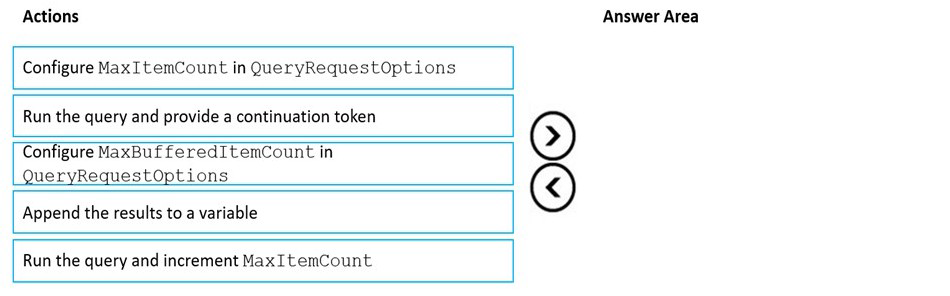

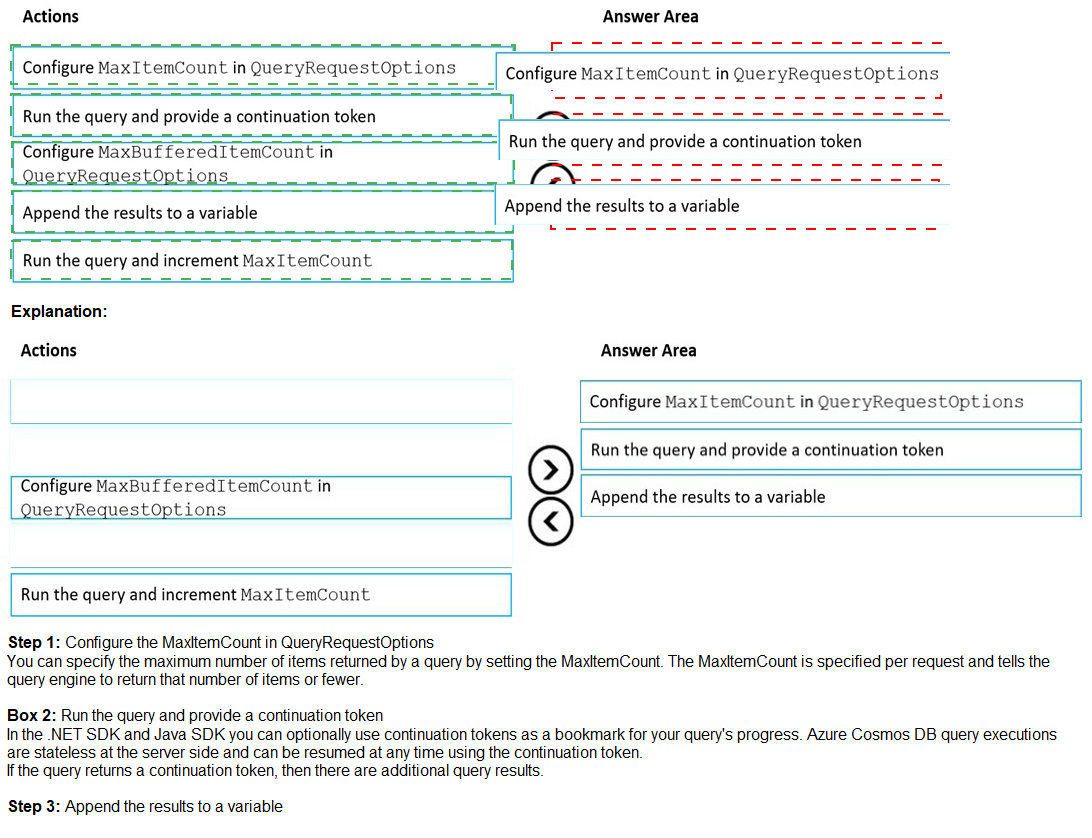

You have an app that stores data in an Azure Cosmos DB Core (SQL) API account. The

app performs queries that return large result sets.

You need to return a complete result set to the app by using pagination. Each page of

results must return 80 items.

Which three actions should you perform in sequence? To answer, move the appropriate

actions from the list of actions to the answer area and arrange them in the correct order.
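The general pattern behind this kind of pagination is to cap the page size, run the query, and feed the continuation token from each response into the next request until no token is returned. The sketch below simulates that loop in plain Python. `fetch_page` and the in-memory `ITEMS` list are hypothetical stand-ins for a real query call, not SDK functions.

```python
from typing import Optional

ITEMS = list(range(200))  # stand-in for a large query result set
PAGE_SIZE = 80            # maximum item count per page


def fetch_page(token: Optional[str], max_item_count: int) -> tuple[list[int], Optional[str]]:
    """Return one page plus a continuation token, or None when done."""
    start = int(token) if token else 0
    page = ITEMS[start:start + max_item_count]
    next_start = start + max_item_count
    return page, (str(next_start) if next_start < len(ITEMS) else None)


def read_all() -> list[list[int]]:
    """Collect every page by following continuation tokens."""
    pages, token = [], None
    while True:
        page, token = fetch_page(token, PAGE_SIZE)
        pages.append(page)
        if token is None:
            break
    return pages


pages = read_all()
print([len(p) for p in pages])  # [80, 80, 40]
```

The last page is shorter than the cap, and the missing token is what ends the loop.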
