
Topic 3, Mix Questions

You plan to implement an Azure Data Lake Gen2 storage account.

You need to ensure that the data lake will remain available if a data center fails in the primary Azure region.

The solution must minimize costs.

Which type of replication should you use for the storage account?

A. geo-redundant storage (GRS)

B. zone-redundant storage (ZRS)

C. locally-redundant storage (LRS)

D. geo-zone-redundant storage (GZRS)

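zone-redundant storage (ZRS)

Explanation:

Zone-redundant storage (ZRS) copies your data synchronously across three Azure availability zones in the primary region, so the data remains readable and writable if a single data center fails. Geo-redundant storage (GRS) and geo-zone-redundant storage (GZRS) add an asynchronous copy in a secondary region and cost more. Locally-redundant storage (LRS) keeps all three copies in a single physical location and does not protect against a data center failure.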

Reference:

https://docs.microsoft.com/en-us/azure/storage/common/storage-redundancy
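
One way to reason about a question like this is to tabulate what each redundancy option protects against and then pick the cheapest option that meets the requirement. The sketch below does that in Python. The `Redundancy` type, the helper names, and the `relative_cost` ranking are illustrative assumptions (an ordering only, not published prices or an Azure API), and the requirement is modeled as "data stays available in the primary region without a failover".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Redundancy:
    name: str
    zones_in_primary: int   # availability zones holding copies in the primary region
    secondary_region: bool  # True if an asynchronous copy exists in a secondary region
    relative_cost: int      # ASSUMPTION: illustrative ranking only, not real prices


OPTIONS = [
    Redundancy("LRS", 1, False, 1),
    Redundancy("ZRS", 3, False, 2),
    Redundancy("GRS", 1, True, 3),
    Redundancy("GZRS", 3, True, 4),
]


def survives_datacenter_failure(option):
    # Modeled requirement: copies span more than one zone in the primary
    # region, so losing one data center does not require a failover.
    return option.zones_in_primary > 1


def cheapest_option(options, requirement):
    # Keep only the options that satisfy the requirement, then minimize cost.
    candidates = [o for o in options if requirement(o)]
    return min(candidates, key=lambda o: o.relative_cost)


choice = cheapest_option(OPTIONS, survives_datacenter_failure)
print(choice.name)
```

Changing the requirement function (for example, to demand a copy in a secondary region) changes which option is selected, which makes the trade-off between protection and cost explicit.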

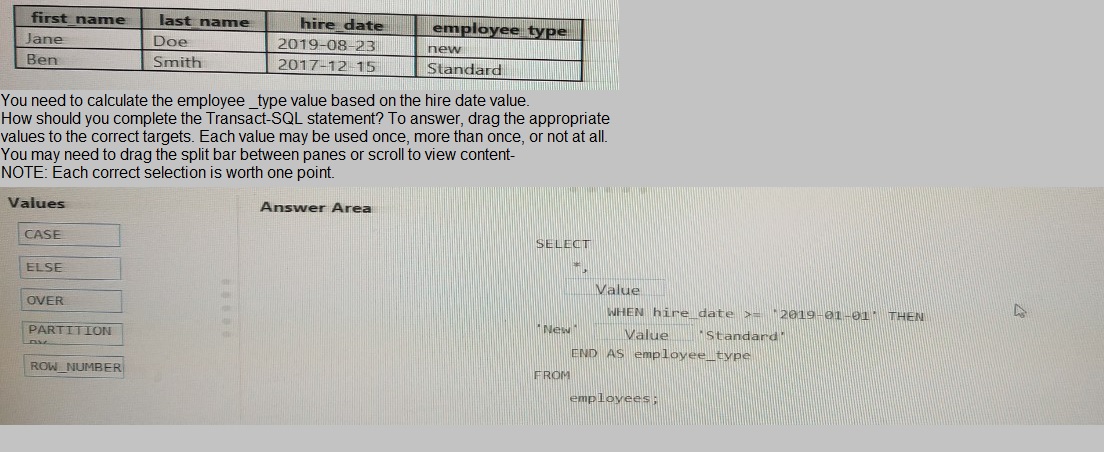

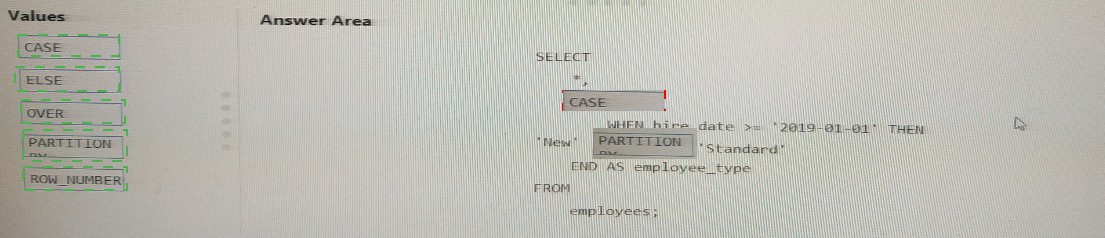

You have the following table named Employees

You have an enterprise-wide Azure Data Lake Storage Gen2 account. The data lake is accessible only through an Azure virtual network named VNET1.

You are building a SQL pool in Azure Synapse that will use data from the data lake.

Your company has a sales team. All the members of the sales team are in an Azure Active Directory group named Sales. POSIX controls are used to assign the Sales group access to the files in the data lake.

You plan to load data to the SQL pool every hour.

You need to ensure that the SQL pool can load the sales data from the data lake.

Which three actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Add the managed identity to the Sales group.

B. Use the managed identity as the credentials for the data load process.

C. Create a shared access signature (SAS).

D. Add your Azure Active Directory (Azure AD) account to the Sales group.

E. Use the shared access signature (SAS) as the credentials for the data load process.

F. Create a managed identity.

Add the managed identity to the Sales group.

Use the managed identity as the credentials for the data load process.

Create a managed identity.
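
Because access to the files is granted to the Sales group through POSIX-style controls, an identity can read them only once it is a member of that group. The toy model below illustrates that check. The principal names, the file path, and the `can_read` helper are all hypothetical, and no Azure SDK is involved.

```python
# Hypothetical in-memory model of group-based ACLs; not an Azure SDK call.
group_members = {"Sales": {"alice@contoso.com"}}
file_acl = {"/sales/orders.parquet": {"Sales": "r-x"}}


def can_read(principal, path):
    """Return True if a group containing the principal has read permission on the path."""
    for group, perms in file_acl.get(path, {}).items():
        if principal in group_members.get(group, set()) and "r" in perms:
            return True
    return False


identity = "synapse-managed-identity"  # hypothetical name for the managed identity
path = "/sales/orders.parquet"

before = can_read(identity, path)      # identity is not yet in the Sales group
group_members["Sales"].add(identity)   # add the managed identity to the group
after = can_read(identity, path)

print(before, after)  # prints: False True
```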

You have an enterprise data warehouse in Azure Synapse Analytics named DW1 on a server named Server1. You need to verify whether the size of the transaction log file for each distribution of DW1 is smaller than 160 GB.

What should you do?

A. On the master database, execute a query against the sys.dm_pdw_nodes_os_performance_counters dynamic management view.

B. From Azure Monitor in the Azure portal, execute a query against the logs of DW1.

C. On DW1, execute a query against the sys.database_files dynamic management view.

D. Execute a query against the logs of DW1 by using the Get-AzOperationalInsightSearchResult PowerShell cmdlet.

On the master database, execute a query against the sys.dm_pdw_nodes_os_performance_counters dynamic management view.

Explanation:

The following query returns the transaction log size on each distribution. If one of the log files is reaching 160 GB, you should consider scaling up your instance or limiting your transaction size.

-- Transaction log size
SELECT
  instance_name AS distribution_db,
  cntr_value*1.0/1048576 AS log_file_size_used_GB,
  pdw_node_id
FROM sys.dm_pdw_nodes_os_performance_counters
WHERE
  instance_name LIKE 'Distribution_%'
  AND counter_name = 'Log File(s) Used Size (KB)'

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-manage-monitor
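
The query above reports the counter in KB and divides by 1048576 (1024 × 1024) to express it in GB. As a minimal sketch of how the result could be checked against the 160 GB threshold, the Python below applies the same conversion to a few made-up rows. The sample values and the helper names are assumptions for illustration, not output from a real pool.

```python
KB_PER_GB = 1024 * 1024  # 1,048,576, the divisor used in the query
LOG_LIMIT_GB = 160       # threshold named in the question


def log_sizes_gb(rows):
    """Convert (instance_name, cntr_value_kb, pdw_node_id) rows to GB."""
    return [(name, kb / KB_PER_GB, node_id) for name, kb, node_id in rows]


def distributions_at_limit(rows, limit_gb=LOG_LIMIT_GB):
    """Return the distributions whose used log size is at or above the limit."""
    return [name for name, size_gb, _ in log_sizes_gb(rows) if size_gb >= limit_gb]


# Hypothetical rows shaped like the query result (values in KB).
sample_rows = [
    ("Distribution_1", 52_428_800, 1),   # 50 GB
    ("Distribution_2", 167_772_160, 1),  # 160 GB
    ("Distribution_3", 1_048_576, 2),    # 1 GB
]

print(distributions_at_limit(sample_rows))  # prints: ['Distribution_2']
```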

A company purchases IoT devices to monitor manufacturing machinery. The company uses an IoT appliance to communicate with the IoT devices.

The company must be able to monitor the devices in real-time.

You need to design the solution.

What should you recommend?

A. Azure Stream Analytics cloud job using Azure PowerShell

B. Azure Analysis Services using Azure Portal

C. Azure Data Factory instance using Azure Portal

D. Azure Analysis Services using Azure PowerShell

Azure Stream Analytics cloud job using Azure PowerShell

Explanation:

Stream Analytics is a cost-effective event processing engine that helps uncover real-time insights from devices, sensors, infrastructure, applications, and data quickly and easily.

You can monitor and manage Stream Analytics resources with Azure PowerShell cmdlets and PowerShell scripts that execute basic Stream Analytics tasks.

Reference:

https://cloudblogs.microsoft.com/sqlserver/2014/10/29/microsoft-adds-iot-streaming-analytics-data-production-and-workflow-services-to-azure/
