
Create a Pod named nginx-pod in the namespace testing. Create a Service named nginx-svc for nginx-pod. Using the ingress controller of your choice, expose the Service through an Ingress served over TLS on the secure port.

Explanation:

$ kubectl get ing -n

NAME           HOSTS      ADDRESS     PORTS   AGE
cafe-ingress   cafe.com   10.0.2.15   80      25s

$ kubectl describe ing

Name: cafe-ingress

Namespace: default

Address: 10.0.2.15

Default backend: default-http-backend:80 (172.17.0.5:8080)

Rules:

Host       Path      Backends
----       ----      --------
cafe.com
           /tea      tea-svc:80 (
           /coffee   coffee-svc:80 (

Annotations:

kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"networking.k8s.io/v1","kind":"Ingress","metadata":{"annotations":{},"name":"cafe-ingress","namespace":"default","selfLink":"/apis/networking/v1/namespaces/default/ingresses/cafe-ingress"},"spec":{"rules":[{"host":"cafe.com","http":{"paths":[{"backend":{"serviceName":"tea-svc","servicePort":80},"path":"/tea"},{"backend":{"serviceName":"coffee-svc","servicePort":80},"path":"/coffee"}]}}]},"status":{"loadBalancer":{"ingress":[{"ip":"169.48.142.110"}]}}}

Events:

Type     Reason   Age   From                       Message
----     ------   ----  ----                       -------
Normal   CREATE   1m    ingress-nginx-controller   Ingress default/cafe-ingress
Normal   UPDATE   58s   ingress-nginx-controller   Ingress default/cafe-ingress

$ kubectl get pods -n

NAME                                        READY   STATUS    RESTARTS   AGE
ingress-nginx-controller-67956bf89d-fv58j   1/1     Running   0          1m

$ kubectl logs -n

-------------------------------------------------------------------------------

NGINX Ingress controller

Release: 0.14.0

Build: git-734361d

Repository: https://github.com/kubernetes/ingress-nginx

-------------------------------------------------------------------------------
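The output above shows how to inspect an existing Ingress and its controller, but not the objects the task asks for. A minimal sketch of manifests that could satisfy it follows. It assumes the namespace testing already exists and an ingress-nginx controller is installed; the label, the Ingress name, the host example.com, and the Secret name nginx-tls are hypothetical placeholders, not values from the task.

```yaml
# Sketch only: label, Ingress name, host, and Secret name are assumptions.
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  namespace: testing
  labels:
    app: nginx-pod          # hypothetical label, matched by the Service selector
spec:
  containers:
  - name: nginx
    image: nginx
    ports:
    - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
  namespace: testing
spec:
  selector:
    app: nginx-pod          # routes traffic to the Pod above
  ports:
  - port: 80
    targetPort: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress       # hypothetical name
  namespace: testing
spec:
  ingressClassName: nginx   # assumes an ingress-nginx controller is installed
  tls:
  - hosts:
    - example.com           # hypothetical host
    secretName: nginx-tls   # hypothetical Secret of type kubernetes.io/tls
  rules:
  - host: example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-svc
            port:
              number: 80
```

The TLS Secret must exist in the same namespace as the Ingress. One way to create it is `kubectl create secret tls nginx-tls --cert=tls.crt --key=tls.key -n testing`, where tls.crt and tls.key are hypothetical file names for a certificate and key you already hold.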

Create a new ServiceAccount named backend-sa in the existing namespace default, with permission to list Pods in that namespace. Create a new Pod named backend-pod in the namespace default that runs under the ServiceAccount backend-sa, and verify that the Pod can list Pods. Ensure that the Pod is running.

Explanation:

A service account provides an identity for processes that run in a Pod.

When you (a human) access the cluster (for example, using kubectl), you are authenticated by the apiserver as a particular User Account (currently this is usually admin, unless your cluster administrator has customized your cluster). Processes in containers inside pods can also contact the apiserver. When they do, they are authenticated as a particular Service Account (for example, default).

When you create a pod, if you do not specify a service account, it is automatically assigned the default service account in the same namespace. If you get the raw json or yaml for a pod you have created (for example, kubectl get pods/

You can access the API from inside a pod using automatically mounted service account credentials, as described in Accessing the Cluster. The API permissions of the service account depend on the authorization plugin and policy in use.

In version 1.6+, you can opt out of automounting API credentials for a service account by setting automountServiceAccountToken: false on the service account:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: build-robot
automountServiceAccountToken: false

In version 1.6+, you can also opt out of automounting API credentials for a particular pod:

apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  serviceAccountName: build-robot
  automountServiceAccountToken: false

The pod spec takes precedence over the service account if both specify an automountServiceAccountToken value.
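The explanation above covers service accounts in general but not the objects the task requires. A minimal sketch follows, assuming RBAC is the authorization mode in use. The Role and RoleBinding names, the container name, and the nginx image are hypothetical choices; only backend-sa, backend-pod, and the namespace default come from the task.

```yaml
# Sketch only: Role/RoleBinding names, container name, and image are assumptions.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: backend-sa
  namespace: default
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader              # hypothetical name
  namespace: default
rules:
- apiGroups: [""]               # "" is the core API group, where Pods live
  resources: ["pods"]
  verbs: ["list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: backend-sa-pod-reader   # hypothetical name
  namespace: default
subjects:
- kind: ServiceAccount
  name: backend-sa
  namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: pod-reader
---
apiVersion: v1
kind: Pod
metadata:
  name: backend-pod
  namespace: default
spec:
  serviceAccountName: backend-sa   # run the Pod under the new ServiceAccount
  containers:
  - name: backend                  # hypothetical container name
    image: nginx                   # hypothetical image
```

One way to check the permission without entering the Pod is `kubectl auth can-i list pods --as=system:serviceaccount:default:backend-sa -n default`, and `kubectl get pod backend-pod -n default` shows whether the Pod is running.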

Create a NetworkPolicy named allow-np that allows Pods in the namespace staging to connect to port 80 of other Pods in the same namespace.

Ensure that the NetworkPolicy:

1. Does not allow access to Pods that are not listening on port 80.

2. Does not allow access from Pods outside the namespace staging.

Explanation:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-np
  namespace: staging
spec:
  podSelector: {}         # selects all Pods in the staging namespace
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector: {}     # only Pods in the same namespace (staging) may connect
    ports:                # inbound traffic is allowed on TCP port 80 only
    - protocol: TCP
      port: 80

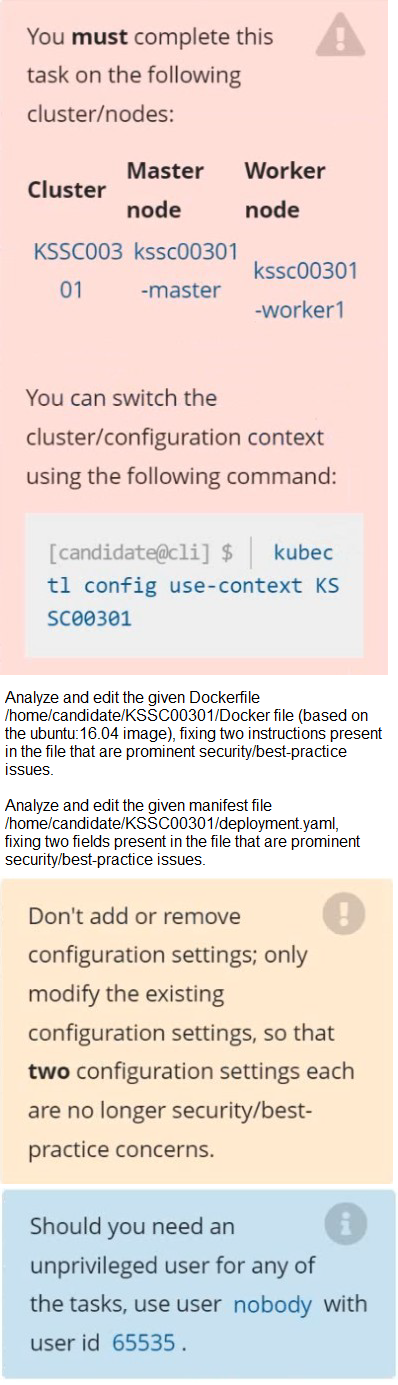

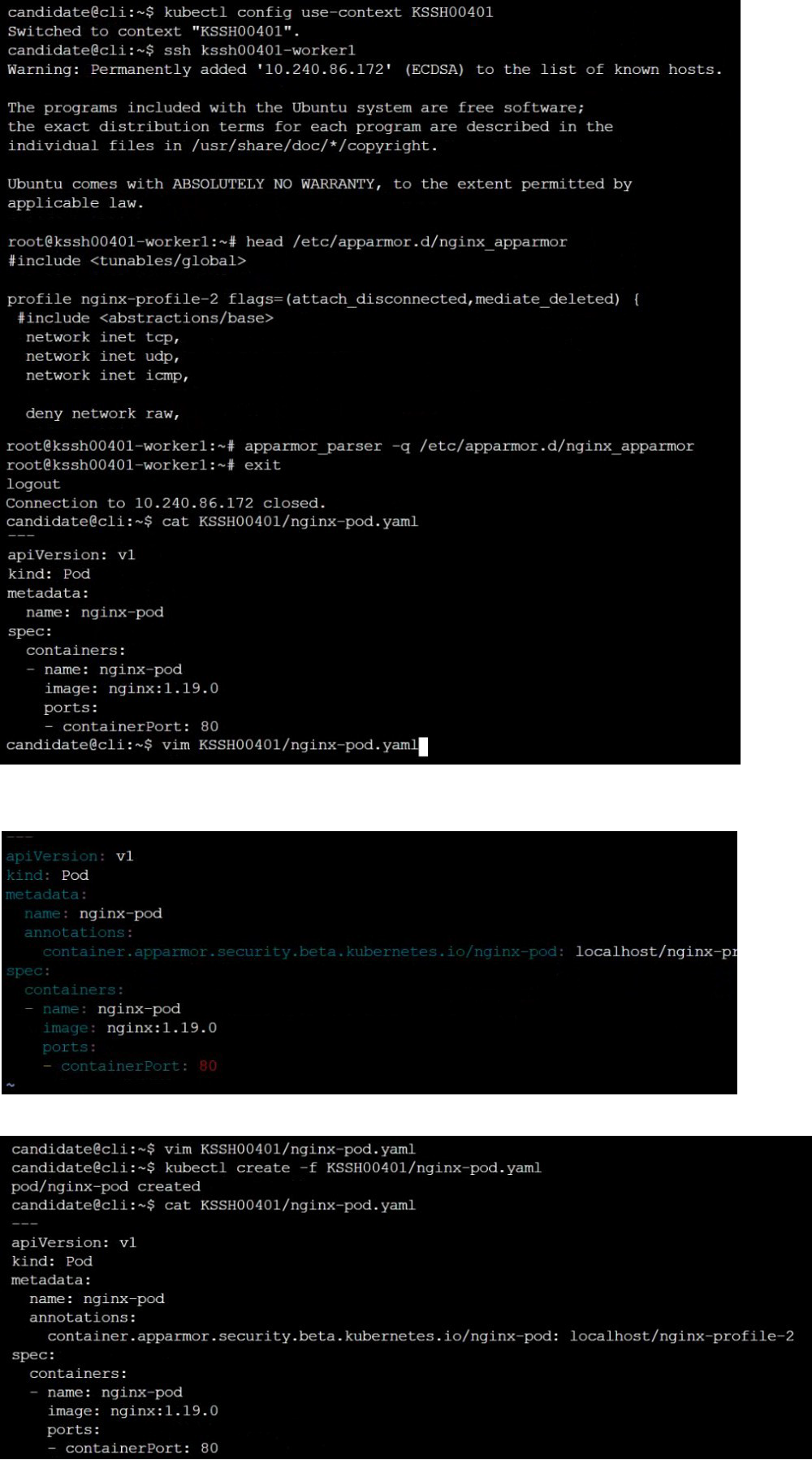

On the cluster worker node, enforce the prepared AppArmor profile:

#include

profile nginx-deny flags=(attach_disconnected) {
  #include

  file,

  # Deny all file writes.
  deny /** w,
}

EOF'

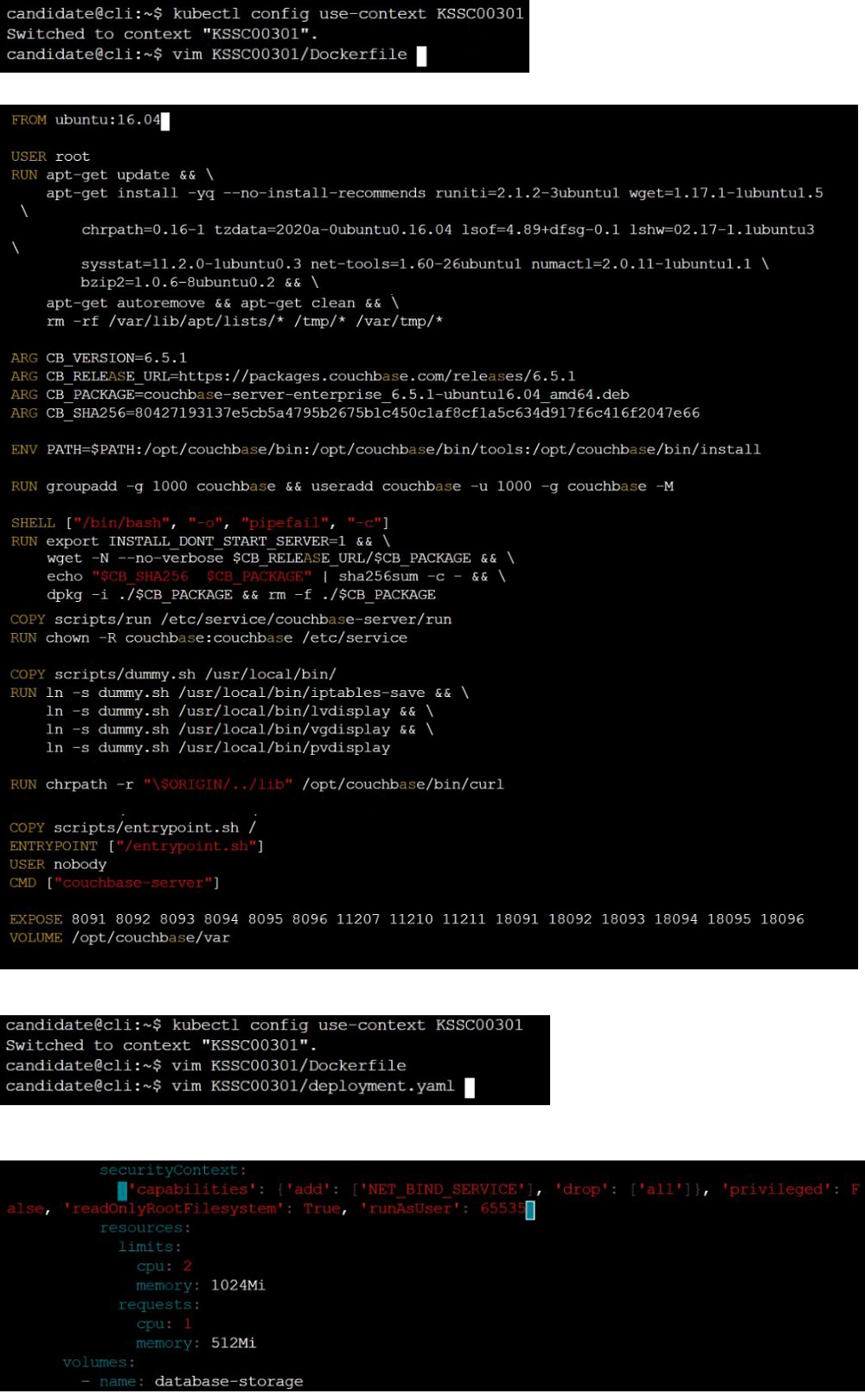

Edit the prepared manifest file to include the AppArmor profile.

apiVersion: v1
kind: Pod
metadata:
  name: apparmor-pod
spec:
  containers:
  - name: apparmor-pod
    image: nginx
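The manifest as shown does not yet reference the profile. A minimal sketch of the edited version follows, assuming the nginx-deny profile is already loaded on the node where the Pod is scheduled. It uses the AppArmor annotation, whose key suffix must match the container name. Newer Kubernetes releases (1.30 and later) also accept a securityContext.appArmorProfile field with type Localhost, so which form the exam cluster expects is an assumption here.

```yaml
# Sketch only: assumes the nginx-deny profile is loaded on the target node.
apiVersion: v1
kind: Pod
metadata:
  name: apparmor-pod
  annotations:
    # Key suffix is the container name; value is localhost/<profile name>.
    container.apparmor.security.beta.kubernetes.io/apparmor-pod: localhost/nginx-deny
spec:
  containers:
  - name: apparmor-pod
    image: nginx
```

If the profile is not loaded on the node, the Pod is expected to be rejected rather than run unconfined, so checking the Pod status after applying the manifest is a useful first verification step.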

Finally, apply the manifest file to create the Pod it specifies.

Verify: try to create a file inside the restricted directory; the profile should deny the write.
